Human-in-the-Loop AI: Bridging Human and Machine Intelligence

Artificial intelligence has transformed how businesses operate, but the most powerful AI systems don't work in isolation. They thrive when human intelligence guides machine learning capabilities. This collaboration creates more accurate, ethical, and reliable AI solutions that enterprises can trust.

Human-in-the-loop (HITL) AI represents a fundamental shift from fully automated systems to collaborative intelligence. By combining human expertise with machine efficiency, organizations can build AI systems that learn continuously, make better decisions, and align with business values. This approach addresses critical challenges in AI development while ensuring human oversight remains central to intelligent systems.

Human-in-the-loop AI integrates human judgment directly into machine learning workflows. Unlike traditional automated systems that operate independently, HITL AI creates a continuous dialogue between human experts and AI models. This collaboration happens at multiple stages: data preparation, model training, validation, and ongoing optimization.

The core principle centers on leveraging human strengths where machines fall short. Humans excel at understanding context, making ethical judgments, and handling ambiguous situations. Machines process vast amounts of data quickly and identify patterns humans might miss. Together, they create more robust AI systems.

Effective HITL AI systems include several essential elements. Human oversight provides quality control and ethical guidance throughout the AI lifecycle. The AI feedback loop enables continuous improvement as human input refines model performance. Active learning algorithms identify where human expertise adds the most value, optimizing resource allocation.

Real-world applications span industries. Healthcare systems use HITL AI for medical diagnosis, where doctors validate AI recommendations before treatment decisions. Financial institutions employ human oversight for fraud detection, ensuring complex cases receive expert review. Autonomous vehicle development relies on human annotation of edge cases to improve safety systems.

Quality AI training data forms the backbone of successful machine learning systems. Human expertise becomes crucial in creating accurate, representative datasets that enable models to learn effectively. AI annotation involves humans labeling data to teach machines how to recognize patterns, classify information, and make predictions.

Data labeling encompasses various approaches depending on the use case. Supervised learning requires extensive human annotation to create labeled training sets. Semi-supervised approaches combine small amounts of labeled data with larger unlabeled datasets. Active learning strategies identify the most valuable data points for human annotation, maximizing impact while minimizing effort.
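As a rough illustration of how active learning picks data points for annotation, the sketch below uses margin-based uncertainty sampling, one common strategy among several. Items whose two most likely predicted classes are closest in probability go to human annotators first. The probability values, item IDs, and the `select_for_annotation` helper are hypothetical; in practice the probabilities would come from the current model's predictions on the unlabeled pool.

```python
from typing import Dict, List, Sequence


def margin(probs: Sequence[float]) -> float:
    """Gap between the two most probable classes; a small gap means the model is unsure."""
    top = sorted(probs, reverse=True)
    return top[0] - top[1] if len(top) > 1 else top[0]


def select_for_annotation(pool: Dict[str, List[float]], budget: int) -> List[str]:
    """Return the `budget` unlabeled item IDs with the smallest margins (most uncertain first)."""
    return sorted(pool, key=lambda item_id: margin(pool[item_id]))[:budget]


# Hypothetical predicted class probabilities for four unlabeled items.
example_pool = {
    "doc-1": [0.98, 0.01, 0.01],
    "doc-2": [0.40, 0.35, 0.25],
    "doc-3": [0.55, 0.44, 0.01],
    "doc-4": [0.70, 0.20, 0.10],
}

print(select_for_annotation(example_pool, budget=2))  # ['doc-2', 'doc-3']
```

Other uncertainty measures, such as least confidence or predictive entropy, slot into the same ranking step by swapping out `margin`.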

Smart organizations combine human expertise with automated tools for efficient data labeling workflows. Humans handle complex, ambiguous cases that require domain knowledge and contextual understanding. Automated systems process straightforward labeling tasks at scale. This hybrid approach optimizes both quality and efficiency.
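A minimal sketch of this hybrid split, assuming the model reports a confidence score with each prediction: outputs at or above a threshold are accepted automatically, and the rest are queued for human labeling. The `Prediction` class, the `route` function, and the 0.9 threshold are illustrative assumptions rather than values from any particular platform; the threshold would normally be tuned by weighing the cost of errors against the cost of review.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model probability for `label`, in [0, 1]


def route(
    predictions: List[Prediction], threshold: float = 0.9
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into (auto_accepted, needs_human_review) by confidence."""
    auto_accepted: List[Prediction] = []
    needs_review: List[Prediction] = []
    for pred in predictions:
        if pred.confidence >= threshold:
            auto_accepted.append(pred)
        else:
            needs_review.append(pred)
    return auto_accepted, needs_review


batch = [
    Prediction("img-1", "cat", 0.97),
    Prediction("img-2", "dog", 0.62),
    Prediction("img-3", "cat", 0.91),
]
auto, review = route(batch)
print([p.item_id for p in auto])    # ['img-1', 'img-3']
print([p.item_id for p in review])  # ['img-2']
```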

Best practices for data labeling include clear annotation guidelines, regular quality checks, and iterative refinement processes. Multiple annotators can label the same data to ensure consistency and identify potential biases. Version control systems track changes and maintain data lineage throughout the annotation process.
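To make "multiple annotators" measurable, teams commonly report an agreement statistic and resolve disagreements with a consensus rule. The sketch below computes Cohen's kappa for two annotators, plus a simple majority vote that returns `None` on ties so they can be escalated to an expert. The labels are made up, and the tie-escalation policy is an assumption for illustration.

```python
from collections import Counter
from typing import Optional, Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Agreement between two annotators, corrected for agreement expected by chance."""
    if len(a) != len(b) or not a:
        raise ValueError("annotators must label the same non-empty set of items")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: probability both pick the same label if labeling independently.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


def majority_vote(labels: Sequence[str]) -> Optional[str]:
    """Consensus label, or None when the top labels tie (escalate to an adjudicator)."""
    ranked = Counter(labels).most_common()
    if not ranked:
        return None
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


annotator_a = ["spam", "spam", "ham", "ham", "spam", "ham"]
annotator_b = ["spam", "ham", "ham", "ham", "spam", "ham"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.667
print(majority_vote(["spam", "ham", "spam"]))            # spam
```

A kappa near 1 indicates agreement well beyond chance; persistently low values often signal that the annotation guidelines need clarification.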

Expert Insight

Organizations that invest in high-quality human annotation see 40% better model performance compared to those relying solely on automated labeling. The initial investment in human expertise pays dividends through improved accuracy and reduced downstream errors.

Human validation ensures AI models perform reliably in real-world scenarios. While automated testing catches technical errors, human reviewers identify subtle issues like bias, inappropriate responses, or contextual misunderstandings. This validation process becomes especially critical for enterprise applications where accuracy and reliability are paramount.
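One way to structure such a validation process, sketched here under assumptions, is to draw a reproducible audit sample of model outputs for human review, stratified by category so that low-volume categories are still examined. The stratum names, sample size, and the `stratified_audit_sample` helper are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def stratified_audit_sample(
    outputs: List[Tuple[str, str]], per_stratum: int, seed: int = 0
) -> Dict[str, List[str]]:
    """Draw up to `per_stratum` output IDs from each stratum for human review.

    `outputs` holds (output_id, stratum) pairs; a stratum might be a use case,
    product line, or customer segment. Sampling per stratum keeps rare
    categories from being crowded out by high-volume ones.
    """
    by_stratum: Dict[str, List[str]] = defaultdict(list)
    for output_id, stratum in outputs:
        by_stratum[stratum].append(output_id)
    rng = random.Random(seed)  # fixed seed makes the audit sample reproducible
    return {
        stratum: rng.sample(ids, min(per_stratum, len(ids)))
        for stratum, ids in by_stratum.items()
    }


outputs = [(f"out-{i}", "support" if i % 3 else "billing") for i in range(30)]
outputs += [("out-legal-1", "legal"), ("out-legal-2", "legal")]
audit = stratified_audit_sample(outputs, per_stratum=5)
print({stratum: len(ids) for stratum, ids in audit.items()})
# {'billing': 5, 'support': 5, 'legal': 2}
```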

Model optimization through human feedback creates iterative improvement cycles. Experts review AI outputs, identify areas for enhancement, and provide targeted feedback. This information guides retraining efforts, prompt engineering improvements, and architectural adjustments. The result is AI systems that continuously evolve and improve.
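The iterative cycle can be sketched with a deliberately tiny model. Below, a nearest-centroid classifier (chosen only because it retrains instantly) predicts labels for a batch, a simulated human reviewer supplies the correct labels, and the verified examples are folded back into the training set before retraining. The data, the `reviewer` dictionary standing in for a review interface, and the function names are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Example = Tuple[Point, str]


def train(examples: List[Example]) -> Dict[str, Point]:
    """Fit a nearest-centroid model: the mean feature vector for each label."""
    sums: Dict[str, List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0])
    for (x, y), label in examples:
        acc = sums[label]
        acc[0] += x
        acc[1] += y
        acc[2] += 1
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def predict(model: Dict[str, Point], point: Point) -> str:
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    return min(
        model,
        key=lambda label: (point[0] - model[label][0]) ** 2 + (point[1] - model[label][1]) ** 2,
    )


def feedback_round(
    training: List[Example], batch: List[Point], reviewer: Dict[Point, str]
) -> Tuple[List[Example], Dict[str, Point], int]:
    """One HITL cycle: predict, collect human verdicts, fold them in, retrain."""
    model = train(training)
    corrections = 0
    updated = list(training)
    for point in batch:
        verdict = reviewer[point]  # the human's label for this output
        if predict(model, point) != verdict:
            corrections += 1
        updated.append((point, verdict))
    return updated, train(updated), corrections


seed_data = [((0.0, 0.0), "low"), ((10.0, 10.0), "high")]
batch = [(1.0, 1.0), (4.0, 4.0)]
reviewer = {(1.0, 1.0): "low", (4.0, 4.0): "high"}
training_data, model, corrections = feedback_round(seed_data, batch, reviewer)
print(corrections, predict(model, (4.0, 4.0)))  # 1 high
```

The first model misclassifies the point at (4, 4); after the reviewer's correction is added and the model is retrained, the same point is classified as the reviewer intended.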

Human oversight plays a vital role in detecting and correcting AI bias. Automated systems may perpetuate historical biases present in training data or develop new biases through skewed learning processes. Human reviewers can identify these issues and guide corrective actions.

Effective bias detection requires diverse review teams with different perspectives and backgrounds. Regular audits of AI outputs across different demographic groups help identify disparate impacts. When bias is detected, human experts can adjust training data, modify algorithms, or implement fairness constraints.
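A minimal sketch of such an audit, assuming each decision is logged with a group attribute and a favorable or unfavorable outcome: compute the favorable-outcome rate per group and divide the lowest rate by the highest. The 0.8 cutoff follows the "four-fifths rule" heuristic from US employment-selection guidance; it is used here only as an example trigger for human review, and the records are invented.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes (e.g. approvals) for each demographic group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


records = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
if ratio < 0.8:  # four-fifths heuristic: a prompt for human review, not a verdict
    print("Flag for human review: possible disparate impact")
```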

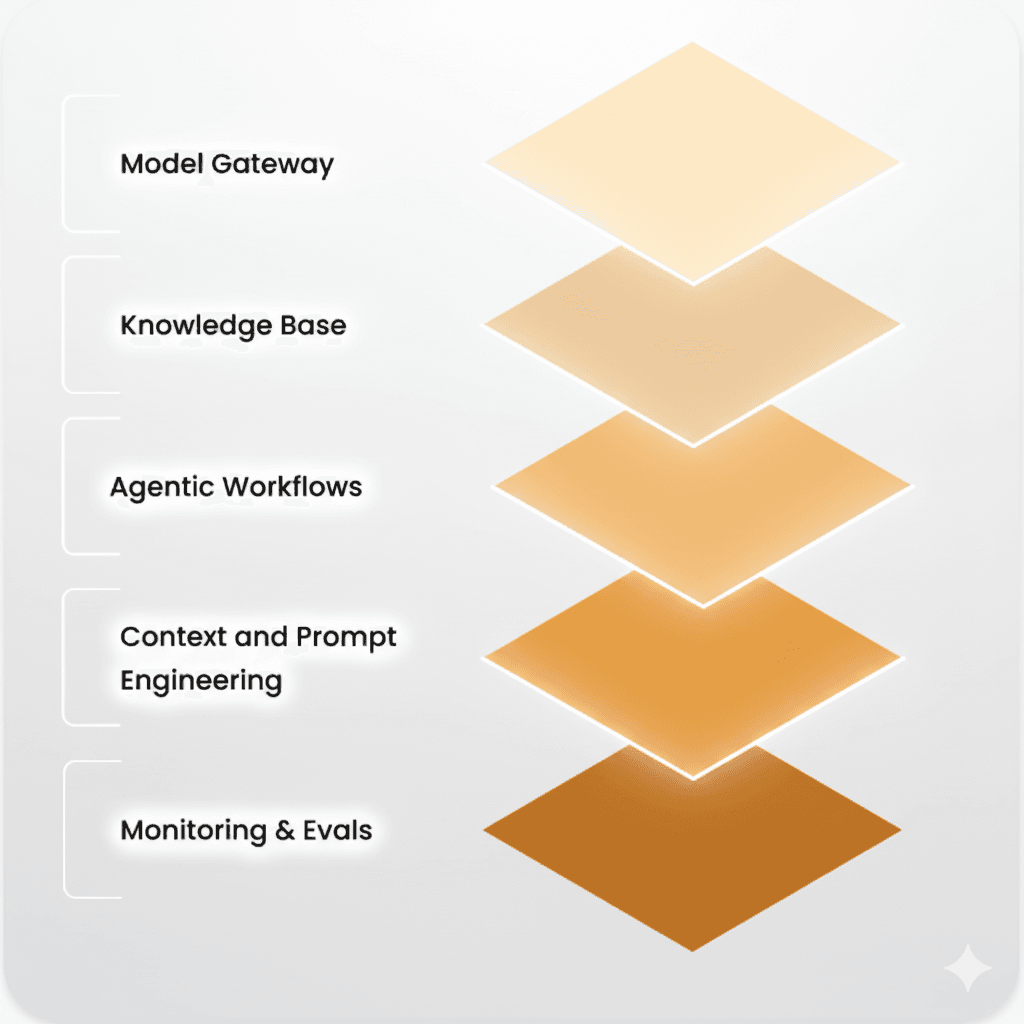

Prompt engineering has emerged as a critical skill in modern AI systems, especially those involving large language models. Human expertise in crafting effective prompts directly impacts AI performance and output quality. The iterative process of prompt refinement creates an AI feedback loop that improves both human understanding and machine responses.

Effective prompt engineering combines technical knowledge with domain expertise. Humans understand the nuances of language, context, and desired outcomes. They can craft prompts that guide AI systems toward more accurate, relevant, and useful responses. This collaboration becomes especially powerful in complex business applications.

Successful prompt engineering in HITL systems involves structured workflows that capture human insights and translate them into improved AI performance. Teams develop prompt libraries, test variations systematically, and document what works best for different scenarios. This knowledge becomes an organizational asset that improves over time.
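A prompt library and a systematic comparison can be as simple as a dictionary of versioned templates scored against a small labeled test set. In this sketch, `toy_model` is a hypothetical stand-in for a real model call (it only answers in one word when the prompt asks it to), so the example runs offline; the template names, test cases, and exact-match scoring are assumptions chosen for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Prompt library: version name -> template with a {text} placeholder.
PROMPT_LIBRARY: Dict[str, str] = {
    "sentiment-v1": "What is the sentiment of this review? {text}",
    "sentiment-v2": (
        "Classify the review as positive or negative. Answer with one word.\n"
        "Review: {text}"
    ),
}


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call, used only so the harness runs offline."""
    sentiment = "positive" if "love" in prompt.lower() else "negative"
    if "answer with one word" in prompt.lower():
        return sentiment
    return f"I think the sentiment is {sentiment}."


def evaluate(template: str, cases: List[Tuple[str, str]], model: Callable[[str], str]) -> float:
    """Exact-match accuracy of one template over labeled test cases."""
    hits = 0
    for text, expected in cases:
        answer = model(template.format(text=text)).strip().lower()
        hits += int(answer == expected)
    return hits / len(cases)


def rank_variants(
    library: Dict[str, str], cases: List[Tuple[str, str]], model: Callable[[str], str]
) -> List[Tuple[str, float]]:
    """Score every template and return (name, accuracy) pairs, best first."""
    scores = [(name, evaluate(template, cases, model)) for name, template in library.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


test_cases = [("I love this product", "positive"), ("Broke after one day", "negative")]
print(rank_variants(PROMPT_LIBRARY, test_cases, toy_model))
# [('sentiment-v2', 1.0), ('sentiment-v1', 0.0)]
```

Replacing `toy_model` with a real client function of the same `str -> str` shape would leave the harness unchanged. Exact-match scoring suits classification-style prompts; open-ended outputs typically need human or rubric-based grading.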

Advanced prompt engineering techniques include few-shot prompting, where humans supply worked examples in the prompt to guide AI behavior. Chain-of-thought prompting asks AI systems to break complex problems down step by step before answering. These approaches leverage human reasoning patterns to enhance machine performance.
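The sketch below shows one way to assemble a few-shot prompt from human-curated examples, with an optional chain-of-thought cue. The `build_few_shot_prompt` helper and its `Input:`/`Output:` layout are illustrative assumptions. The "Let's think step by step." line is a widely used zero-shot chain-of-thought cue; a few-shot chain-of-thought prompt would instead include worked reasoning inside each example.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
    chain_of_thought: bool = False,
) -> str:
    """Assemble a few-shot prompt from human-curated (input, output) examples."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines.append(f"Input: {query}")
    if chain_of_thought:
        # Cue asking the model to reason before giving its answer.
        lines.append("Let's think step by step.")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify each support ticket's priority as high or low.",
    [("Checkout page returns a 500 error", "high"), ("Typo in the footer", "low")],
    "Customers cannot reset their passwords",
)
print(prompt)
```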

AI ethics requires human judgment to navigate complex moral and social considerations. Automated systems cannot fully understand the ethical implications of their decisions or the broader impact on society. Human oversight ensures AI systems align with organizational values and societal expectations.

Ethical HITL implementation addresses fairness, transparency, and accountability. Human reviewers can assess whether AI decisions treat different groups equitably. They can explain AI reasoning to stakeholders and take responsibility for system outcomes. This human accountability builds trust in AI systems.

Transparency in AI systems requires human interpretation and explanation. While machines can process complex data and generate outputs, humans must translate these results into understandable insights. This translation process ensures stakeholders can trust and act on AI recommendations.

Regulatory compliance often mandates human oversight in AI systems. Regulated industries such as healthcare, finance, and transportation frequently require human accountability for AI-driven decisions. HITL approaches naturally support these requirements by maintaining human involvement throughout the AI lifecycle.

Implementing effective HITL AI systems requires careful planning and execution. Organizations must balance automation benefits with human oversight costs. The key lies in identifying where human expertise adds the most value and designing workflows that optimize this collaboration.

Successful implementation starts with clear objectives and success metrics. Teams need to understand what they want to achieve through HITL AI and how they will measure progress. This clarity guides technology selection, team structure, and workflow design decisions.

Effective HITL AI teams combine technical expertise with domain knowledge. Data scientists develop and maintain AI models. Domain experts provide subject matter guidance and validation. Annotation specialists handle data labeling tasks. Project managers coordinate workflows and ensure quality standards.

Technology infrastructure must support seamless human-AI collaboration. Platforms should enable easy data annotation, model validation, and feedback collection. Integration with existing enterprise systems ensures HITL AI fits naturally into business processes. Cloud-agnostic solutions provide flexibility and avoid vendor lock-in.

Organizations need clear metrics to evaluate HITL AI effectiveness. Accuracy improvements, error reduction rates, and processing time savings provide quantitative measures. Qualitative benefits include improved decision confidence, better risk management, and enhanced regulatory compliance.
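For the quantitative measures, a minimal sketch: score a baseline model and a HITL-improved model against the same human-verified test set, then report each model's accuracy plus the error reduction rate (the share of baseline errors eliminated). The labels and predictions below are invented for illustration, and `compare_models` is a hypothetical helper.

```python
from typing import Dict, Sequence


def error_count(predictions: Sequence[str], gold: Sequence[str]) -> int:
    """Number of predictions that disagree with the human-verified labels."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold labels must align")
    return sum(p != g for p, g in zip(predictions, gold))


def compare_models(
    baseline: Sequence[str], improved: Sequence[str], gold: Sequence[str]
) -> Dict[str, float]:
    n = len(gold)
    base_errors = error_count(baseline, gold)
    new_errors = error_count(improved, gold)
    return {
        "baseline_accuracy": 1 - base_errors / n,
        "improved_accuracy": 1 - new_errors / n,
        # Share of the baseline's errors that the improved model eliminates
        # (reported as 0.0 when the baseline made no errors).
        "error_reduction_rate": (base_errors - new_errors) / base_errors if base_errors else 0.0,
    }


gold = ["ok"] * 7 + ["fraud"] * 3
baseline = ["ok"] * 6 + ["fraud"] + ["ok"] * 3                  # 4 errors
improved = ["ok"] * 6 + ["fraud"] + ["fraud", "fraud", "ok"]    # 2 errors
print(compare_models(baseline, improved, gold))
```

Here the baseline makes 4 errors and the improved model 2, so accuracy rises from 0.6 to 0.8 and the error reduction rate is 0.5.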

Cost-benefit analysis should consider both direct and indirect impacts. While human involvement increases upfront costs, the improved accuracy and reduced errors often generate significant long-term savings. Better AI performance can drive revenue growth, operational efficiency, and competitive advantage.
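A back-of-the-envelope version of this analysis compares the savings from errors avoided with the cost of human review. Every number in the example (volume, error rates, cost per error, review share, review cost) is hypothetical, and the calculation ignores indirect effects such as revenue growth, so treat it as a starting template rather than a complete ROI model.

```python
def net_benefit(
    items: int,
    baseline_error_rate: float,
    hitl_error_rate: float,
    cost_per_error: float,
    review_fraction: float,
    review_cost_per_item: float,
) -> float:
    """Savings from avoided errors minus the cost of human review (same currency units)."""
    errors_avoided = items * (baseline_error_rate - hitl_error_rate)
    savings = errors_avoided * cost_per_error
    review_cost = items * review_fraction * review_cost_per_item
    return savings - review_cost


# Hypothetical figures: 3,000 errors avoided x 40 = 120,000 saved;
# 10,000 reviewed items x 2 = 20,000 spent on review.
print(round(net_benefit(100_000, 0.05, 0.02, 40.0, 0.10, 2.0), 2))  # 100000.0
```

A negative result signals that, under the assumed figures, review costs outweigh the errors avoided, which may argue for reviewing a smaller, better-targeted share of items.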

Human-in-the-loop AI combines machine efficiency with human judgment, creating more accurate and reliable systems. Humans provide context, ethical oversight, and domain expertise that machines cannot replicate, resulting in better real-world performance.

Quality data labeling provides accurate training examples that teach AI models to recognize patterns correctly. Poor labeling leads to inaccurate models, while high-quality human annotation significantly improves model accuracy and reliability.

HITL AI works best for high-stakes decisions, complex tasks requiring domain expertise, situations with ethical considerations, or when dealing with ambiguous data that needs human interpretation and validation.

The costs of HITL AI include human annotator time, expert validation, training programs, and technology platforms. However, these investments often pay for themselves through improved accuracy, reduced errors, and better business outcomes.

Prompt engineering allows humans to guide AI behavior through carefully crafted instructions. This creates a feedback loop where human expertise improves AI responses, while AI outputs help humans refine their prompting strategies.

Human-in-the-loop AI represents the future of enterprise artificial intelligence, where human expertise and machine capabilities work together to create more powerful, ethical, and reliable systems. By implementing thoughtful HITL strategies, organizations can harness AI's potential while maintaining the human oversight necessary for responsible innovation. The key lies in finding the right balance between automation and human involvement, creating systems that leverage the best of both worlds. As AI technology continues to evolve, the organizations that master human-machine collaboration will gain significant competitive advantages in their markets.
