AI Integration: Bridging Legacy Systems Strategically

Legacy systems power the backbone of most enterprises today. These established infrastructures handle critical business operations but often struggle to accommodate modern AI capabilities. The challenge lies not in replacing these systems entirely, but in creating strategic bridges that enable AI integration while preserving operational stability.

Enterprise leaders face a complex decision: invest heavily in complete system overhauls or find ways to enhance existing infrastructure with AI capabilities. Many organizations choose the latter, implementing phased approaches that deliver early value while building toward comprehensive AI-driven transformation.

Legacy systems present unique challenges for AI adoption. These systems often operate on outdated architectures that were never designed to handle the data volumes and processing requirements of modern AI applications. Data silos create barriers between departments, while rigid structures limit the flexibility needed for AI experimentation and deployment.

The business case for AI integration with legacy systems becomes clear when considering the alternatives. Complete system replacement can cost millions and take years to implement. Meanwhile, competitors gain advantages by quickly deploying AI solutions that enhance customer experiences and operational efficiency.

Organizations encounter several obstacles when attempting AI integration with legacy infrastructure. Outdated APIs may lack the capacity to handle real-time data exchanges required by AI systems. Security protocols designed for traditional computing environments may not address the unique vulnerabilities introduced by AI components.

Data quality issues compound these challenges. Legacy databases often contain inconsistent formats, duplicate records, and incomplete information that can severely impact AI model performance. Without proper data preparation, even the most sophisticated AI algorithms will produce unreliable results.

Successful AI integration begins with comprehensive infrastructure evaluation. Organizations must map their current system architecture, identifying integration points where AI capabilities can be introduced with minimal disruption to existing operations.

Data flow analysis reveals bottlenecks and opportunities for optimization. Understanding how information moves through legacy systems helps identify the most effective points for AI intervention. This assessment should include evaluation of current API capabilities, database structures, and network capacity.

A systematic approach to compatibility assessment examines both technical and business factors. Technical evaluation focuses on system performance, data accessibility, and integration complexity. Business evaluation considers ROI potential, risk tolerance, and strategic alignment with organizational goals.

Security and compliance requirements play a crucial role in this assessment. Legacy systems may operate under strict regulatory frameworks that limit modification options. Understanding these constraints early in the planning process prevents costly delays and redesigns later.

Pro Tip

Start your AI integration journey by identifying low-risk, high-impact use cases within your legacy environment. These quick wins build confidence and demonstrate value while you develop more comprehensive integration strategies.

API-first approaches offer the most flexible path for AI integration with legacy systems. By creating secure interfaces between existing systems and AI components, organizations can introduce intelligent capabilities without modifying core infrastructure. This strategy minimizes risk while enabling rapid deployment of AI solutions.
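As a rough illustration of the API-first idea, the sketch below wraps an unchanged legacy lookup behind a small interface that adds a model score. Everything named here (`FakeLegacyOrders`, `toy_risk_model`, `OrderInsightsAPI`, and the field names `ORD_ID`, `AMT`, `CUST_TIER`) is hypothetical and stands in for whatever the real system exposes.

```python
from typing import Callable, Dict


class FakeLegacyOrders:
    """Stand-in for the existing system; a real adapter would call it unchanged."""

    def fetch_order(self, order_id: str) -> Dict[str, str]:
        # Hypothetical legacy record with terse, string-typed fields.
        return {"ORD_ID": order_id, "AMT": "1250.00", "CUST_TIER": "B"}


def toy_risk_model(features: Dict[str, object]) -> float:
    # Placeholder for a real model call; scales amount into the range 0..1.
    return min(1.0, float(features["amount"]) / 5000)


class OrderInsightsAPI:
    """New interface layered on top of the legacy system, which is not modified."""

    def __init__(self, legacy: FakeLegacyOrders,
                 model: Callable[[Dict[str, object]], float]) -> None:
        self._legacy = legacy
        self._model = model

    def get_order_with_risk(self, order_id: str) -> Dict[str, object]:
        record = self._legacy.fetch_order(order_id)
        # Translate legacy field names and types into model-ready features.
        features = {"amount": float(record["AMT"]), "tier": record["CUST_TIER"]}
        return {
            "order_id": record["ORD_ID"],
            "amount": features["amount"],
            "risk_score": self._model(features),
        }


api = OrderInsightsAPI(FakeLegacyOrders(), toy_risk_model)
result = api.get_order_with_risk("A-100")
```

Because the AI logic lives only in the wrapper, it can be swapped or removed without touching the legacy code path.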

Middleware solutions provide another effective integration path. These platforms act as translators between legacy systems and modern AI applications, handling data format conversions and protocol translations seamlessly. Popular middleware options include message brokers and data integration platforms that facilitate real-time communication.
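The format-conversion role of middleware can be sketched in a few lines. The example below assumes a hypothetical fixed-width customer record (the column layout is invented for illustration) and translates it into JSON that a modern AI service could consume.

```python
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Field:
    name: str
    start: int  # inclusive column offset
    end: int    # exclusive column offset


# Hypothetical layout: id (6 chars), name (20), signup date YYYYMMDD (8), balance in cents (9).
LAYOUT: List[Field] = [
    Field("customer_id", 0, 6),
    Field("name", 6, 26),
    Field("signup_date", 26, 34),
    Field("balance_cents", 34, 43),
]


def translate_record(line: str) -> Dict[str, object]:
    """Convert one fixed-width legacy line into a JSON-friendly dict."""
    raw = {f.name: line[f.start:f.end].strip() for f in LAYOUT}
    d = raw["signup_date"]
    return {
        "customer_id": raw["customer_id"],
        "name": raw["name"].title(),
        "signup_date": f"{d[0:4]}-{d[4:6]}-{d[6:8]}",  # ISO 8601 date
        "balance": int(raw["balance_cents"]) / 100,     # cents to currency units
    }


legacy_line = "000042" + "JANE DOE".ljust(20) + "20190315" + "000012550"
message = json.dumps(translate_record(legacy_line))
```

A production middleware layer would also handle malformed lines and character encodings, which this sketch omits.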

The strangler fig pattern is a gradual replacement methodology that lets organizations replace legacy functionality with AI-enhanced alternatives one piece at a time. Like a strangler fig that gradually encompasses a tree, new AI-powered components take over functions from legacy systems until little or nothing of the original remains. This approach reduces risk while enabling continuous improvement.

Microservices architecture supports this pattern by breaking down monolithic legacy applications into smaller, manageable components. Each microservice can be independently upgraded or replaced with AI-enhanced versions, creating a more flexible and maintainable system architecture.
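One way to picture the strangler fig approach is a routing facade that sends each operation either to the legacy code or to its replacement. The sketch below is a minimal in-process version; `StranglerRouter`, `legacy_handler`, and `new_invoice_classifier` are illustrative names, and a real deployment would more likely route at the HTTP or message layer.

```python
from typing import Callable, Dict


def legacy_handler(operation: str, payload: dict) -> dict:
    # Stand-in for the monolith: it can handle every operation.
    return {"handled_by": "legacy", "operation": operation}


def new_invoice_classifier(payload: dict) -> dict:
    # Stand-in for an AI-enhanced replacement of a single function.
    return {"handled_by": "new", "operation": "classify_invoice"}


class StranglerRouter:
    """Routes migrated operations to new handlers; everything else stays on legacy."""

    def __init__(self, legacy: Callable[[str, dict], dict]) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Callable[[dict], dict]] = {}

    def migrate(self, operation: str, handler: Callable[[dict], dict]) -> None:
        self._migrated[operation] = handler

    def rollback(self, operation: str) -> None:
        # Returning an operation to legacy is a one-line change.
        self._migrated.pop(operation, None)

    def handle(self, operation: str, payload: dict) -> dict:
        handler = self._migrated.get(operation)
        if handler is not None:
            return handler(payload)
        return self._legacy(operation, payload)


router = StranglerRouter(legacy_handler)
router.migrate("classify_invoice", new_invoice_classifier)
```

Callers only ever talk to the router, so each function can move to the new implementation (or back) without clients noticing.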

Effective data migration strategies form the foundation of successful AI integration. Legacy data often requires extensive cleaning and normalization before it can effectively train AI models or support intelligent applications. This process involves identifying and correcting inconsistencies, removing duplicates, and standardizing formats.
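The cleaning steps named above (correcting inconsistencies, removing duplicates, standardizing formats) might look like the following in miniature. The record fields and the list of accepted date formats are assumptions made for the example, not a general-purpose rule set.

```python
from datetime import datetime
from typing import Dict, List, Optional

# Assumed date formats found in the hypothetical legacy data, tried in order.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def normalize_date(value: str) -> Optional[str]:
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(rows: List[Dict[str, str]]) -> List[Dict[str, Optional[str]]]:
    """Standardize fields and drop rows with a missing or duplicate email."""
    seen = set()
    cleaned = []
    for row in rows:
        email = row.get("email", "").strip().lower()
        if not email or email in seen:
            continue  # incomplete or duplicate record
        seen.add(email)
        cleaned.append({
            "email": email,
            "name": " ".join(row.get("name", "").split()).title(),
            "signup_date": normalize_date(row.get("signup_date", "")),
        })
    return cleaned


rows = [
    {"email": " Jane@Example.com ", "name": "jane   doe", "signup_date": "03/15/2019"},
    {"email": "jane@example.com", "name": "JANE DOE", "signup_date": "2019-03-15"},
    {"email": "bob@example.com", "name": "bob ray", "signup_date": "15.03.2019"},
    {"email": "", "name": "no email", "signup_date": "unknown"},
]
cleaned = clean_records(rows)
```

Here the first occurrence of a duplicate wins; real pipelines often need a more deliberate merge rule.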

Extract, Transform, Load (ETL) processes help prepare legacy data for AI consumption. However, modern AI applications often benefit from Extract, Load, Transform (ELT) approaches that leverage cloud computing power for data transformation. The choice between these approaches depends on data volume, processing requirements, and system constraints.
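The difference between the two orderings can be shown with an in-memory SQLite database standing in for the target store. In this sketch (table names and sample rows are invented), the ETL path parses values in application code before loading, while the ELT path loads raw text first and transforms it with SQL inside the database.

```python
import sqlite3
from typing import List, Optional, Tuple

raw_rows: List[Tuple[str, str]] = [("A-1", " 19.99 "), ("A-2", "5"), ("A-3", "n/a")]


def parse_amount(text: str) -> Optional[float]:
    try:
        return float(text.strip())
    except ValueError:
        return None


def run_etl(conn: sqlite3.Connection, rows: List[Tuple[str, str]]) -> None:
    """Transform in Python, then load only the clean rows."""
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, amount REAL)")
    transformed = [(oid, parse_amount(amount)) for oid, amount in rows]
    conn.executemany(
        "INSERT INTO orders_etl VALUES (?, ?)",
        [row for row in transformed if row[1] is not None],
    )


def run_elt(conn: sqlite3.Connection, rows: List[Tuple[str, str]]) -> None:
    """Load raw text as-is, then transform inside the database with SQL."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, amount_text TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", rows)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id, CAST(TRIM(amount_text) AS REAL) AS amount
        FROM orders_raw
        WHERE TRIM(amount_text) GLOB '[0-9]*'
        """
    )


conn = sqlite3.connect(":memory:")
run_etl(conn, raw_rows)
run_elt(conn, raw_rows)
etl_result = conn.execute("SELECT order_id, amount FROM orders_etl ORDER BY order_id").fetchall()
elt_result = conn.execute("SELECT order_id, amount FROM orders_elt ORDER BY order_id").fetchall()
```

Both paths end with the same clean table. ELT additionally keeps `orders_raw`, which allows the transformation to be rerun later with different rules.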

AI applications often require real-time access to current data for optimal performance. Implementing data synchronization mechanisms ensures that AI models work with the most recent information available. This may involve setting up data streaming pipelines or implementing change data capture systems.
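A simple form of change data capture is to poll for rows whose version counter has advanced past a stored high-water mark. The sketch below uses SQLite and an assumed `row_version` column; log-based CDC tools work differently and are not shown here.

```python
import sqlite3
from typing import List, Tuple

conn = sqlite3.connect(":memory:")
# Hypothetical legacy table with a monotonically increasing version column.
conn.execute(
    "CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER, row_version INTEGER)"
)


def poll_changes(
    conn: sqlite3.Connection, last_version: int
) -> Tuple[List[Tuple[str, int, int]], int]:
    """Return rows changed since last_version and the new high-water mark."""
    rows = conn.execute(
        "SELECT sku, qty, row_version FROM inventory "
        "WHERE row_version > ? ORDER BY row_version",
        (last_version,),
    ).fetchall()
    new_mark = rows[-1][2] if rows else last_version
    return rows, new_mark


conn.executemany(
    "INSERT INTO inventory VALUES (?, ?, ?)",
    [("SKU-1", 10, 1), ("SKU-2", 4, 2)],
)
first_batch, mark = poll_changes(conn, 0)

# A later update bumps the version, so only this row appears in the next poll.
conn.execute("UPDATE inventory SET qty = 9, row_version = 3 WHERE sku = 'SKU-1'")
second_batch, mark = poll_changes(conn, mark)
```

Polling of this kind does not see deleted rows and adds query load to the source, which is one reason streaming or log-based capture is often preferred at scale.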

Data governance frameworks become critical when integrating AI with legacy systems. These frameworks ensure data quality, security, and compliance throughout the integration process. Proper governance also helps maintain data lineage, making it easier to trace decisions back to their source data.

A structured implementation roadmap helps organizations navigate the complexity of AI integration while managing risk and resource allocation. The first phase typically focuses on low-risk, high-impact use cases that demonstrate clear business value without requiring extensive system modifications.

Predictive maintenance applications often serve as excellent starting points for AI integration. These solutions can analyze data from existing sensors and systems to predict equipment failures, reducing downtime and maintenance costs. The integration requirements are typically minimal, making implementation straightforward.
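To give a sense of how little integration a first pass can need, the sketch below flags sensor readings that deviate sharply from a rolling baseline. It is a simple statistical check standing in for a trained model, and the readings, window size, and threshold are all illustrative assumptions.

```python
from statistics import mean, stdev
from typing import List


def flag_anomalies(readings: List[float], window: int = 5,
                   threshold: float = 3.0) -> List[int]:
    """Return indices whose reading is more than `threshold` standard
    deviations away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical bearing temperatures exported from an existing sensor feed.
temperatures = [70.1, 70.3, 69.9, 70.2, 70.0, 70.1, 75.5, 70.2]
alerts = flag_anomalies(temperatures)
```

The only input is a list of numbers the legacy system already records, so the check can run entirely outside that system.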

Phase two expands AI capabilities to process automation and workflow optimization. This stage may involve implementing intelligent document processing, automated customer service responses, or supply chain optimization algorithms. These applications typically require more extensive integration but deliver significant operational improvements.

Advanced analytics and decision support systems characterize the third phase of implementation. These solutions provide executives and managers with AI-powered insights that inform strategic decisions. Implementation complexity increases significantly, but the potential for competitive advantage grows proportionally.

The final phase represents full digital transformation, where AI capabilities become integral to core business processes. This stage requires comprehensive system integration and may involve significant architectural changes. However, organizations that reach this level often achieve substantial competitive advantages.

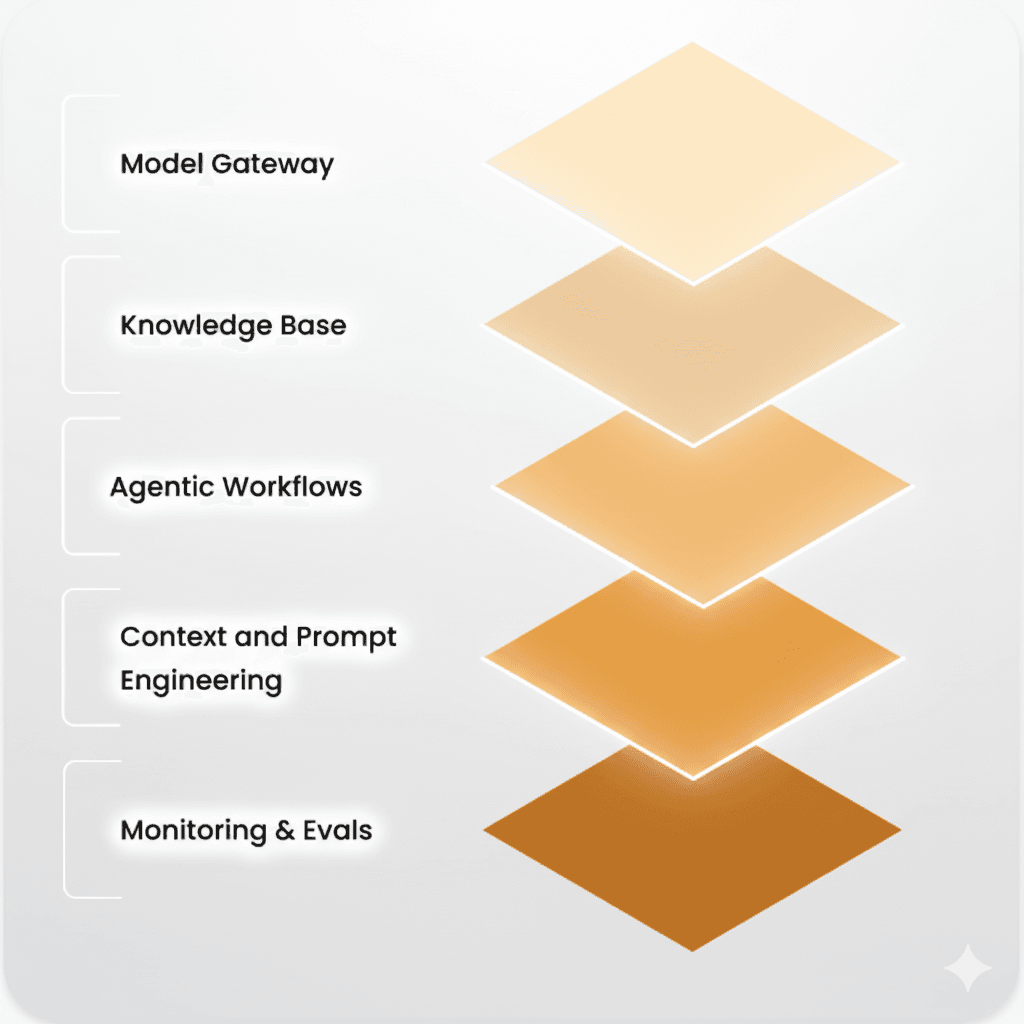

API gateways serve as critical infrastructure components for legacy-AI integration. These platforms manage authentication, rate limiting, and protocol translation between legacy systems and AI applications. Popular options include Kong, Apigee, and cloud-native solutions that provide scalability and security.
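Two of the gateway responsibilities mentioned above, authentication and rate limiting, can be sketched without any particular product. The example below is a toy in-process gateway using a token bucket; it does not reflect how Kong or Apigee are configured, and the key, capacity, and backend are made up.

```python
import time
from typing import Callable, Dict, Optional, Set, Tuple


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Checks the API key, applies a per-key rate limit, then forwards the request."""

    def __init__(self, backend: Callable[[dict], dict], valid_keys: Set[str],
                 capacity: int = 2, refill_per_second: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._backend = backend
        self._valid_keys = valid_keys
        self._capacity = capacity
        self._refill = refill_per_second
        self._clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def handle(self, api_key: str, request: dict) -> Tuple[int, Optional[dict]]:
        if api_key not in self._valid_keys:
            return 401, None  # unauthenticated
        if api_key not in self._buckets:
            self._buckets[api_key] = TokenBucket(self._capacity, self._refill, self._clock)
        if not self._buckets[api_key].allow():
            return 429, None  # rate limit exceeded
        return 200, self._backend(request)


def legacy_backend(request: dict) -> dict:
    # Stand-in for the protected legacy service.
    return {"echo": request}
```

The injectable `clock` exists only so the limiter can be exercised deterministically; by default it uses `time.monotonic`.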

Data integration platforms facilitate the movement and transformation of information between legacy systems and AI applications. These tools handle complex data mapping, format conversion, and quality validation automatically. Leading platforms offer both on-premises and cloud deployment options to accommodate various security requirements.

Message brokers enable asynchronous communication between legacy systems and AI components. Apache Kafka, RabbitMQ, and cloud-based messaging services provide reliable, scalable communication channels that can handle high-volume data streams. These platforms ensure that system failures in one component do not cascade to others.
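The decoupling a broker provides can be imitated in-process with a queue and a worker thread. This sketch uses Python's standard `queue` module rather than Kafka or RabbitMQ, and `score_event` is a hypothetical stand-in for an AI component. A failing message is set aside in a dead-letter list instead of stopping the producer or the remaining messages.

```python
import queue
import threading
from typing import List, Optional, Tuple


def score_event(event: dict) -> int:
    # Hypothetical AI component; rejects events it cannot process.
    if "value" not in event:
        raise ValueError("missing value")
    return event["value"] * 2


def consumer(inbox: "queue.Queue[Optional[dict]]", results: List[int],
             dead_letters: List[Tuple[dict, str]]) -> None:
    while True:
        event = inbox.get()
        if event is None:  # sentinel: no more messages
            break
        try:
            results.append(score_event(event))
        except Exception as exc:
            # Isolate the failure so later messages are still processed.
            dead_letters.append((event, str(exc)))


def run_demo(events: List[dict]) -> Tuple[List[int], List[Tuple[dict, str]]]:
    inbox: "queue.Queue[Optional[dict]]" = queue.Queue()
    results: List[int] = []
    dead_letters: List[Tuple[dict, str]] = []
    worker = threading.Thread(target=consumer, args=(inbox, results, dead_letters))
    worker.start()
    for event in events:  # the producer never waits on the consumer's outcome
        inbox.put(event)
    inbox.put(None)
    worker.join()
    return results, dead_letters


results, dead_letters = run_demo([{"value": 1}, {"bad": True}, {"value": 3}])
```

A real broker adds durability and delivery guarantees that an in-memory queue does not offer.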

Robotic Process Automation (RPA) tools offer another integration approach, particularly for organizations with limited API access to legacy systems. RPA solutions can interact with legacy applications through their user interfaces, providing a bridge to AI capabilities without requiring system modifications.

Implementation timelines vary significantly based on system complexity and integration scope. Simple API-based integrations can be completed in weeks, while comprehensive transformations may require 12-18 months. Phased approaches allow organizations to realize benefits incrementally while managing implementation complexity.

Security for legacy-AI integration focuses on protecting data in transit between systems, ensuring proper authentication and authorization, and maintaining compliance with existing regulatory requirements. Zero Trust architecture principles help secure AI integrations by verifying every access request and encrypting all communications.
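Verifying every request can take many forms; one common building block is signing each call with a shared secret so the receiver can check both origin and integrity. The sketch below uses Python's standard `hmac` module. The message layout, secret, and five-minute freshness window are assumptions for illustration, and signing complements transport encryption such as TLS rather than replacing it.

```python
import hashlib
import hmac


def sign(secret: bytes, method: str, path: str, timestamp: int, body: bytes) -> str:
    """Compute an HMAC-SHA256 signature over the request's key parts."""
    message = f"{method}\n{path}\n{timestamp}\n".encode() + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, method: str, path: str, timestamp: int, body: bytes,
           signature: str, now: int, max_skew_seconds: int = 300) -> bool:
    """Reject stale requests, then compare signatures in constant time."""
    if abs(now - timestamp) > max_skew_seconds:
        return False
    expected = sign(secret, method, path, timestamp, body)
    return hmac.compare_digest(expected, signature)


SECRET = b"demo-shared-secret"  # hypothetical; real secrets belong in a secrets manager
body = b'{"order_id": "A-100"}'
signature = sign(SECRET, "POST", "/score", 1_700_000_000, body)
```

A receiver would call `verify` with its own clock reading as `now` and refuse any request for which it returns `False`.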

Most legacy systems cannot directly support AI processing requirements. However, hybrid architectures that leverage cloud computing for AI processing while maintaining data in legacy systems offer effective solutions. This approach preserves existing investments while enabling AI capabilities.

Success metrics should align with business objectives and may include operational efficiency improvements, cost reductions, customer satisfaction increases, or revenue growth. Technical metrics like system uptime, data quality scores, and integration performance also provide valuable insights into project success.

Successful integration requires a combination of legacy system expertise, modern AI development skills, and integration architecture knowledge. Teams benefit from having members who understand both traditional enterprise systems and contemporary AI technologies, along with strong project management capabilities.

AI integration with legacy systems represents a strategic opportunity for enterprises to enhance their capabilities without abandoning existing investments. Success requires careful planning, phased implementation, and the right combination of technologies and expertise. Organizations that approach this challenge systematically can achieve significant competitive advantages while managing risk and controlling costs. The key lies in viewing integration not as a technical hurdle, but as a strategic enabler of digital transformation that builds upon existing strengths while embracing future possibilities.
