Hybrid AI Deployment: Enterprise Strategy Insights

Enterprise leaders face a critical decision as artificial intelligence moves from experimental technology to business imperative. The global AI market, which some forecasts projected would reach $500 billion by 2024, is shifting toward hybrid architectures that combine cloud, on-premise, and edge computing environments.

Traditional single-deployment models no longer meet the complex demands of modern enterprises. Organizations need the scalability of cloud solutions, the security of on-premise systems, and the real-time responsiveness of edge computing, all working together.

Hybrid AI deployment represents a strategic approach that distributes AI workloads across multiple environments based on specific business requirements, data sensitivity, and performance needs. This methodology enables enterprises to optimize costs, enhance security, and maintain operational flexibility while scaling AI initiatives from proof-of-concept to production.

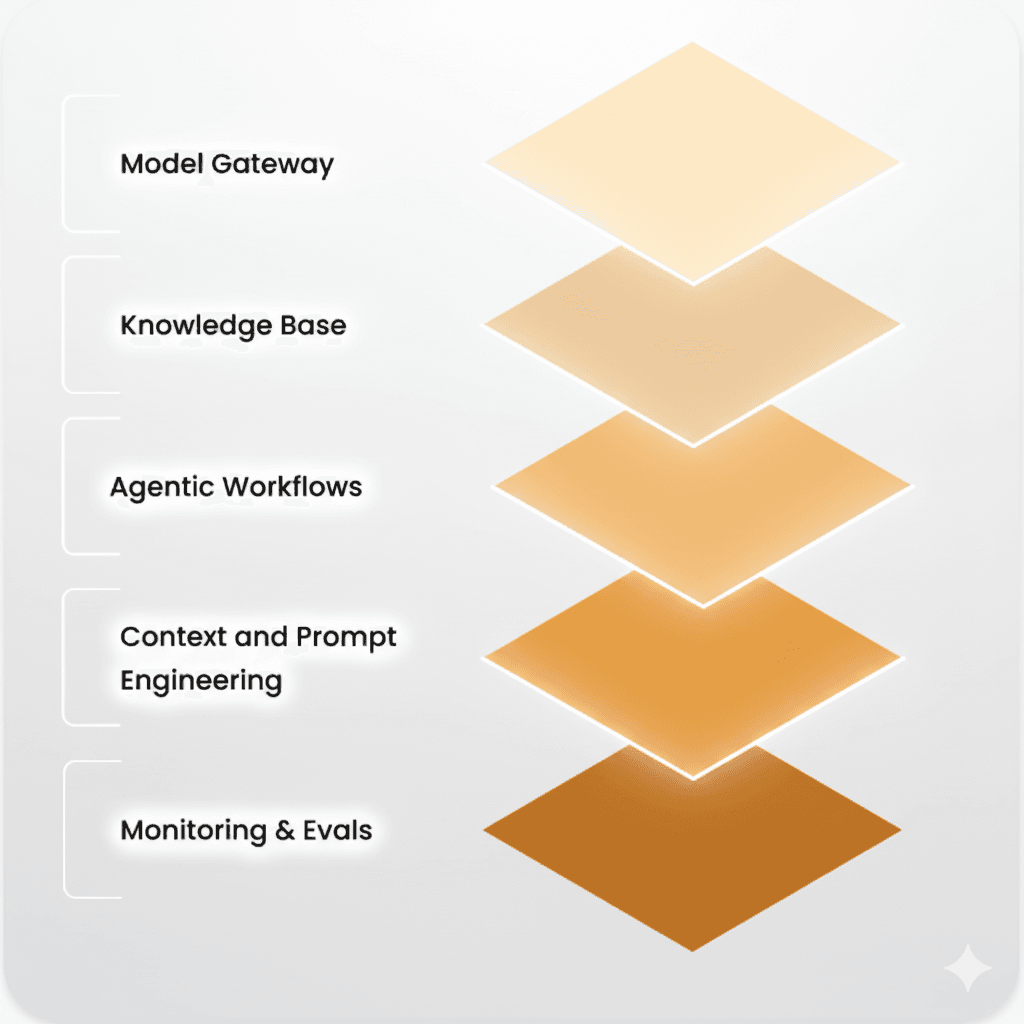

Understanding the five critical components of successful hybrid AI deployment strategy will empower your organization to navigate this complex landscape and unlock the full potential of distributed artificial intelligence systems.

Modern enterprises must evaluate three primary AI deployment models to build an effective hybrid strategy. Each model offers distinct advantages and addresses specific business requirements.

Cloud AI solutions provide unmatched scalability and access to cutting-edge technologies. Major cloud providers offer pre-trained models, extensive computing resources, and managed services that reduce infrastructure overhead. Organizations benefit from rapid deployment capabilities and pay-as-you-scale pricing models.

However, cloud deployments introduce data sovereignty concerns and potential vendor lock-in scenarios. Enterprises handling sensitive information must carefully consider compliance requirements and long-term cost implications as usage scales.

On-premise AI deployments offer maximum security and data control. Organizations maintain complete oversight of their AI infrastructure, ensuring compliance with strict regulatory requirements and protecting intellectual property.

The trade-off involves higher upfront capital investments and ongoing maintenance responsibilities. Enterprises must invest in specialized hardware, software licenses, and technical expertise to manage on-premise AI systems effectively.

Edge AI computing brings intelligence closer to data sources, enabling real-time decision-making with minimal latency. Manufacturing, healthcare, and autonomous vehicle applications particularly benefit from edge deployments.

Edge computing reduces bandwidth costs and improves system resilience by operating independently of central infrastructure. However, managing distributed edge nodes requires sophisticated orchestration capabilities and standardized deployment processes.
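The trade-offs between the three models can be sketched as a simple placement rule. The snippet below is a minimal illustration, not a prescribed method: the `Workload` fields, the 50 ms latency cutoff, and the order of the checks are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Environment(Enum):
    CLOUD = "cloud"
    ON_PREMISE = "on_premise"
    EDGE = "edge"


@dataclass(frozen=True)
class Workload:
    name: str
    handles_regulated_data: bool  # hypothetical flag standing in for data sensitivity
    max_latency_ms: float         # latency budget the application can tolerate


# Assumed cutoff for "real-time" workloads; tune it per application.
EDGE_LATENCY_THRESHOLD_MS = 50.0


def choose_environment(workload: Workload) -> Environment:
    """Pick a deployment target from latency and sensitivity requirements."""
    # Tight latency budgets are checked first: only edge nodes sit next to the data source.
    if workload.max_latency_ms <= EDGE_LATENCY_THRESHOLD_MS:
        return Environment.EDGE
    # Regulated data stays on infrastructure the organization controls.
    if workload.handles_regulated_data:
        return Environment.ON_PREMISE
    # Everything else can use elastic cloud capacity.
    return Environment.CLOUD
```

In practice the rule would weigh more factors (cost, data volume, residency), but even a three-branch function makes the placement policy explicit and testable.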

Expert Insight

Organizations implementing hybrid AI deployment strategies report 40% faster time-to-market for new AI applications compared to single-model approaches, according to recent enterprise surveys.

Successful hybrid AI deployment requires carefully planned AI infrastructure that supports seamless integration across multiple environments. Enterprise architects must consider hardware compatibility, network connectivity, and software standardization.

Modern enterprise AI infrastructure demands high-performance computing resources, robust networking capabilities, and flexible storage solutions. GPU clusters, specialized AI chips, and high-bandwidth connections form the foundation of effective AI deployments.

Container orchestration platforms like Kubernetes enable consistent deployment patterns across cloud, on-premise, and edge environments. This standardization simplifies management and ensures application portability.

AI model management becomes increasingly complex in hybrid environments. Organizations need centralized model repositories, version control systems, and automated deployment pipelines to maintain consistency across distributed infrastructure.

Effective model management includes experiment tracking, performance monitoring, and automated rollback capabilities. These features ensure reliable AI operations and enable rapid iteration cycles.
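To make version tracking and automated rollback concrete, here is a small in-memory sketch. `ModelRegistry` and `deploy_with_guard` are hypothetical names invented for this example; a real deployment would use a dedicated registry service, and the accuracy gate is only one possible rollback trigger.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """In-memory stand-in for a centralized model repository."""

    # Maps a model name to its deployment history, oldest first.
    versions: dict[str, list[str]] = field(default_factory=dict)

    def deploy(self, name: str, version: str) -> None:
        self.versions.setdefault(name, []).append(version)

    def current(self, name: str) -> str:
        return self.versions[name][-1]

    def rollback(self, name: str) -> str:
        """Drop the latest version and return the one that is now live."""
        history = self.versions[name]
        if len(history) < 2:
            raise ValueError(f"no earlier version of {name!r} to roll back to")
        history.pop()
        return history[-1]


def deploy_with_guard(
    registry: ModelRegistry,
    name: str,
    version: str,
    accuracy: float,
    min_accuracy: float,
) -> str:
    """Deploy a version, then roll back if its measured accuracy is too low."""
    registry.deploy(name, version)
    if accuracy < min_accuracy:
        return registry.rollback(name)
    return registry.current(name)
```

The function returns whichever version ends up live, so a calling pipeline can log the outcome without inspecting the registry.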

Hybrid AI deployments require sophisticated data pipelines that handle ingestion, processing, and storage across multiple environments. Data governance frameworks must ensure consistency, quality, and security throughout the pipeline.

Real-time data synchronization between environments enables consistent model performance and accurate analytics. Organizations must implement robust data validation and error handling mechanisms.
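One way to picture data validation and error handling is a check that runs before a record moves between environments. The schema below (`sensor_id`, `timestamp`, `value`) is a made-up example, and the functions are an illustrative sketch rather than a specific pipeline product's API.

```python
from typing import Any

# Hypothetical schema: field name -> required Python type.
REQUIRED_FIELDS = {"sensor_id": str, "timestamp": float, "value": float}


def validate_record(record: dict[str, Any]) -> list[str]:
    """Return a list of problems found in one record (empty if it is valid)."""
    errors = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            errors.append(f"wrong type for {name}: expected {expected_type.__name__}")
    return errors


def partition_records(records: list[dict[str, Any]]):
    """Split records into those safe to sync and those held back with reasons."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping rejected records together with their error messages, instead of dropping them silently, gives the governance process something to audit.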

AI orchestration coordinates complex workflows across hybrid infrastructure, ensuring optimal resource utilization and system performance. Modern orchestration platforms automate deployment, scaling, and monitoring tasks.

Automated workflows streamline AI model deployment and management across distributed environments. Orchestration systems handle dependency management, resource allocation, and failure recovery without manual intervention.
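Dependency management in a workflow usually comes down to running steps in an order that respects their prerequisites. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the step names in `PIPELINE` are hypothetical and stand in for whatever stages an organization's pipeline actually has.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest_data": set(),
    "validate_data": {"ingest_data"},
    "train_model": {"validate_data"},
    "evaluate_model": {"train_model"},
    "deploy_cloud": {"evaluate_model"},
    "deploy_edge": {"evaluate_model"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return the steps in an order where every dependency runs first.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle.
    """
    return list(TopologicalSorter(graph).static_order())
```

The two deploy steps have no dependency on each other, so an orchestrator is free to run them in parallel once evaluation has finished.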

Cross-platform coordination ensures that AI applications can leverage resources from multiple environments based on current demand and availability. This flexibility maximizes system efficiency and reduces operational costs.

Comprehensive monitoring systems track AI model performance, resource utilization, and system health across all deployment environments. Real-time analytics enable proactive optimization and issue resolution.

Performance metrics include model accuracy, inference latency, throughput, and resource consumption. These insights guide capacity planning and optimization decisions.
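As an illustration of how latency and throughput might be summarized from raw request timings, consider the sketch below. The `InferenceStats` type and the choice of the nearest-rank percentile method are assumptions for this example; monitoring tools differ in how they compute percentiles.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceStats:
    p50_latency_ms: float
    p95_latency_ms: float
    throughput_rps: float  # requests per second over the window


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(latencies_ms: list[float], window_seconds: float) -> InferenceStats:
    """Summarize the request latencies observed during one time window."""
    if not latencies_ms:
        raise ValueError("no requests observed in this window")
    ordered = sorted(latencies_ms)
    return InferenceStats(
        p50_latency_ms=percentile(ordered, 50),
        p95_latency_ms=percentile(ordered, 95),
        throughput_rps=len(ordered) / window_seconds,
    )
```

Tracking a tail percentile alongside the median matters because averages can hide the slow requests that users notice first.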

Hybrid deployments require sophisticated cost management strategies that account for different pricing models across cloud, on-premise, and edge environments. Dynamic resource allocation optimizes costs while maintaining performance requirements.

Automated scaling policies adjust resource allocation based on demand patterns, ensuring cost-effective operations without compromising service quality.
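A scaling policy of this kind can be as simple as a proportional rule: scale the replica count by the ratio of observed to target utilization. The sketch below follows the same proportional idea used by the Kubernetes Horizontal Pod Autoscaler, but the function name, parameters, and default bounds are illustrative assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization_pct: float,
    target_utilization_pct: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling: replicas grow or shrink with the utilization ratio."""
    if target_utilization_pct <= 0:
        raise ValueError("target utilization must be positive")
    raw = math.ceil(current_replicas * current_utilization_pct / target_utilization_pct)
    # Clamp so a traffic spike cannot exceed budget and a lull cannot scale to zero.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas running at 90% utilization against a 60% target would scale to six, while the `max_replicas` bound acts as a hard cost ceiling.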

Distributed AI implementation requires careful planning and phased execution to minimize risks and ensure successful outcomes. Organizations should adopt proven methodologies and best practices.

Successful hybrid AI implementations begin with pilot programs that validate technical approaches and business value. Gradual scaling allows organizations to refine processes and address challenges before full-scale deployment.

Each phase should include clear success criteria, performance benchmarks, and risk mitigation strategies. This structured approach reduces implementation risks and ensures stakeholder buy-in.

Hybrid AI deployments require cross-functional teams with expertise in cloud technologies, on-premise systems, and edge computing. Clear roles and responsibilities ensure effective coordination and decision-making.

Governance frameworks establish standards for model development, deployment, and monitoring across all environments. These standards ensure consistency and compliance throughout the organization.

Choosing the right technology partners is crucial for hybrid AI success. Organizations should evaluate platforms based on integration capabilities, security features, and long-term roadmap alignment.

Vendor-agnostic approaches reduce lock-in risks and provide flexibility to adapt to changing requirements. Open standards and APIs enable seamless integration across different platforms and environments.

Effective AI deployment strategy must address security, compliance, and risk management requirements across all environments. Enterprise-grade security frameworks protect sensitive data and ensure regulatory compliance.

Comprehensive data governance policies ensure consistent handling of sensitive information across hybrid environments. Privacy protection mechanisms include data encryption, access controls, and audit trails.

Regulatory compliance requirements vary by industry and geography. Organizations must implement controls that meet the most stringent applicable standards across all deployment environments.

Multi-environment security strategies protect against diverse threat vectors. Zero-trust architectures verify every access request regardless of location or environment.

Continuous security monitoring detects and responds to threats across all deployment environments. Automated response systems minimize exposure and reduce incident response times.

Hybrid deployments enhance business continuity by distributing risks across multiple environments. Failover mechanisms ensure service availability even when individual components experience issues.

Disaster recovery planning includes backup strategies, data replication, and service restoration procedures for each environment type.
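A failover mechanism can be pictured as trying each environment in priority order and falling through to the next when one is unavailable. The sketch below is a minimal version of that pattern; `predict_with_failover` and `AllBackendsFailed` are hypothetical names, and each backend is assumed to be a plain callable that raises an exception on failure.

```python
from typing import Callable, Sequence


class AllBackendsFailed(Exception):
    """Raised when no environment could serve the request."""


def predict_with_failover(
    backends: Sequence[tuple[str, Callable[[dict], float]]],
    features: dict,
) -> tuple[str, float]:
    """Try each (name, predict) backend in order; return the first success."""
    errors = []
    for name, predict in backends:
        try:
            return name, predict(features)
        except Exception as exc:  # broad on purpose: any failure triggers failover
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))
```

Returning the name of the backend that answered lets monitoring record how often traffic is being served by a fallback environment.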

Hybrid AI deployment offers superior flexibility, cost optimization, and risk distribution compared to single-model approaches. Organizations can leverage cloud scalability for development, on-premise security for sensitive data, and edge computing for real-time applications within a unified strategy.

Data security in hybrid environments requires comprehensive encryption, access controls, and monitoring across all platforms. Zero-trust security frameworks verify every access request, while consistent governance policies ensure uniform protection standards regardless of deployment location.

Hybrid AI infrastructure costs vary based on workload distribution and usage patterns. Organizations typically see 20-30% cost savings compared to pure cloud deployments at scale, with initial setup investments offset by long-term operational efficiencies and reduced vendor lock-in risks.

Manufacturing, healthcare, financial services, and retail industries particularly benefit from hybrid AI deployment. These sectors require real-time processing, strict compliance, and data sovereignty while maintaining scalability for analytics and machine learning workloads.

Implementation timelines typically range from 6-18 months depending on organizational complexity and existing infrastructure. Phased approaches allow organizations to realize benefits incrementally while building capabilities and expertise over time.

Hybrid AI deployment represents the future of enterprise artificial intelligence, enabling organizations to optimize performance, security, and costs across diverse computing environments. Success requires careful planning, robust infrastructure, and strategic implementation approaches that balance innovation with operational excellence.

Organizations ready to embrace hybrid AI deployment can unlock new levels of flexibility and efficiency while maintaining the security and control essential for enterprise operations. The journey toward distributed AI excellence begins with understanding your unique requirements and building a comprehensive strategy that evolves with your business needs.
